May 20, 2025 by Okinawa Institute of Science and Technology

Collected at: https://techxplore.com/news/2025-05-peak-efficiency-optimizing-tutelage.html

The information age is built on mathematics. From finding the best route between two points, predicting the future load on a national power grid or tomorrow’s weather, to identifying ideal treatment options for diseases, algorithms share a common structure: they take input data, process it through a series of calculations, and deliver an output.

Powering the ongoing AI revolution are increasingly sophisticated algorithms, often composed of millions of lines of code. And the more steps a model goes through before presenting a solution, the more it costs in computing hardware, time, and energy.

Optimizing these mathematical models is at the heart of the work of the Machine Learning and Data Science Unit (MLDS) at the Okinawa Institute of Science and Technology (OIST). Led by Professor Makoto Yamada, the unit strives to unlock the full potential of machine learning (ML) and improve efficiency, optimizing not just data science but also education and the scholarly output within the unit through a distributed hierarchy.

Reducing costs

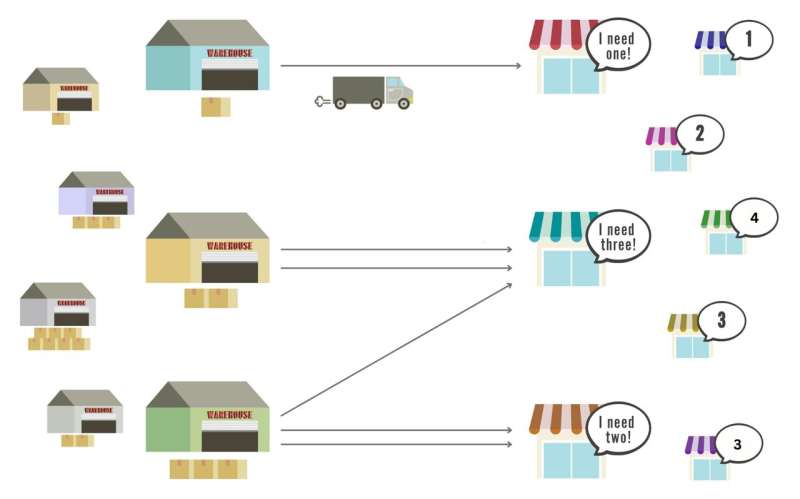

Imagine you are tasked with moving goods from several warehouses to different stores. Each warehouse has a different quantity of goods available, and each store requires a different number of goods. To minimize transportation costs, you need to figure out how much each warehouse should ship to each store so that the total distance traveled by all the needed goods is as small as possible.

This is the basic setup of Optimal Transport (OT) problems, which, while simple on the surface, are challenging at scale: as the number of distribution points (warehouses and stores) increases, so too does the complexity—and cost—of the algorithm.
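
To make the warehouse picture concrete, here is a minimal Python sketch, assuming whole units of goods and a made-up cost table. The function name `brute_force_transport` and all numbers are illustrative and do not come from the unit's work. It finds the cheapest plan by trying every possible unit-to-store assignment, which is exact but grows factorially with the number of units: the kind of blow-up that motivates faster OT solvers.

```python
from itertools import permutations

def brute_force_transport(supply, demand, cost):
    """Exact minimum shipping cost, found by trying every unit-to-unit assignment.

    supply[i] -- whole units available at warehouse i (hypothetical data)
    demand[j] -- whole units required by store j
    cost[i][j] -- cost of moving one unit from warehouse i to store j
    """
    if sum(supply) != sum(demand):
        raise ValueError("total supply must equal total demand")
    # Expand to one entry per unit of goods, e.g. supply [2, 1] -> [0, 0, 1].
    sources = [i for i, s in enumerate(supply) for _ in range(s)]
    sinks = [j for j, d in enumerate(demand) for _ in range(d)]
    best = None
    # Every ordering of the sinks is one way to pair units with store slots.
    for perm in permutations(sinks):
        total = sum(cost[i][j] for i, j in zip(sources, perm))
        if best is None or total < best:
            best = total
    return best

supply = [2, 1]            # two warehouses
demand = [1, 2]            # two stores
cost = [[4, 6],            # warehouse 0 -> store 0, store 1
        [5, 3]]            # warehouse 1 -> store 0, store 1
print(brute_force_transport(supply, demand, cost))
```

With only three units there are six assignments to check; with 20 units there would already be more than 10^18, so this approach is useful only as an illustration of the problem, not as a solver.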

“We are focused on optimizing and designing new tools for solving Optimal Transport problems,” says Prof. Yamada. OT is central to data science as a way of discovering the most efficient method of moving data between distribution points.

Consider single cell gene expression data as an example: “This data can be very highly dimensional with a large number of samples—we might investigate 20,000 genes with 100,000 cells. Computing the relationship between each gene in the context of drug discovery or disease classification is extremely complex, especially if we want to train ML on the data.

“One of our goals is to accurately solve OT in such cases with linear complexity: a computational cost that only scales by the number of distribution points.”

For the International Conference on Learning Representations (ICLR 2025), five papers from the MLDS were accepted. Two of these feature models that focus specifically on bringing down OT cost in computationally expensive ML. Both papers are available on the arXiv preprint server.

One paper introduces an OT method that better captures class relationships by comparing full feature distributions instead of simple averages, improving both accuracy and efficiency.

The second paper addresses the challenge of efficient unsupervised learning on unlabeled data. In this setting, ML models must learn both the structure of the data (how each feature and sample are related) and the rules for measuring similarity, here quantified through Wasserstein distance, which captures the minimal effort needed to morph one data distribution into another.
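
In one dimension this "minimal effort" has a simple closed form, which the following sketch uses. For two histograms over the same equally spaced bins, the Wasserstein-1 distance is the bin spacing times the sum of the absolute differences between their running totals. The function name and the example histograms are assumptions made for illustration.

```python
from itertools import accumulate

def wasserstein_1d(p, q, spacing=1.0):
    """Wasserstein-1 distance between two histograms on the same evenly spaced bins."""
    if len(p) != len(q):
        raise ValueError("histograms must have the same number of bins")
    if abs(sum(p) - sum(q)) > 1e-9:
        raise ValueError("histograms must carry the same total mass")
    # Running surplus of p over q: the mass that has to cross each bin boundary.
    surplus = accumulate(a - b for a, b in zip(p, q))
    return spacing * sum(abs(s) for s in surplus)

# All of the mass moves two bins to the right.
print(wasserstein_1d([1.0, 0.0, 0.0], [0.0, 0.0, 1.0]))
```

The example moves one unit of mass across two bins, so the distance is 2.0. In higher dimensions no such shortcut exists in general, which is where the cost discussed in the article comes from.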

To overcome the prohibitive cost of computing this distance, the team introduces a novel method based on tree-Wasserstein distance. Instead of calculating distances across a full grid of all the distribution points, both samples (like cells) and features (like genes) are mapped onto nodes in a branching tree, where any two nodes are connected by exactly one path. This structure drastically reduces the number of comparisons required, and with it the computational cost.
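
Why a tree helps can be sketched in a few lines of Python. On a fixed tree, the Wasserstein distance between two distributions is the sum, over all edges, of the edge length times the absolute difference in mass sitting below that edge, so one pass over the nodes is enough. The sketch below only evaluates this formula on a small hand-made tree; the tree, edge lengths, and function name are hypothetical, and it does not reproduce how the paper builds or learns its trees.

```python
def tree_wasserstein(parent, edge_length, mu, nu):
    """Wasserstein-1 distance between mu and nu on a fixed rooted tree.

    parent[v]      -- index of the parent of node v (node 0 is the root, parent -1)
    edge_length[v] -- length of the edge from v to parent[v] (ignored for the root)
    mu, nu         -- mass placed on each node, with equal totals
    Nodes are assumed to be numbered so that every parent comes before its children.
    """
    n = len(parent)
    if not (len(edge_length) == len(mu) == len(nu) == n):
        raise ValueError("all inputs must have one entry per node")
    if any(not (0 <= parent[v] < v) for v in range(1, n)):
        raise ValueError("each non-root node must come after its parent")
    surplus = [m - k for m, k in zip(mu, nu)]
    total = 0.0
    # Visit children before parents, pushing each subtree's surplus upward.
    for v in range(n - 1, 0, -1):
        total += edge_length[v] * abs(surplus[v])
        surplus[parent[v]] += surplus[v]
    return total

# Root 0 has children 1 and 2; node 1 has children 3 and 4.
parent = [-1, 0, 0, 1, 1]
edge_length = [0.0, 1.0, 1.0, 2.0, 2.0]
mu = [0, 0, 0, 1, 0]   # all mass on node 3
nu = [0, 0, 0, 0, 1]   # all mass on node 4
print(tree_wasserstein(parent, edge_length, mu, nu))
```

Here the only path from node 3 to node 4 runs through node 1 and has length 4, which is the value the function returns. The loop touches each node once, so the cost grows linearly with the number of nodes.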

Expanding accessibility

Another key focus of the unit is improving the reliability and safety of models by reducing errors and the potential for harm during model development, use, and output. One challenge here is open-set recognition: the inputs that a model encounters after training are messy and often bear no relation to anything it was trained on.

Dr. Mohammad Sabokrou, who leads the unit’s research on trustworthy ML, explains, “If you’re using an ML model to detect different types of cars from images, and you give it a photo of a tiger, it should know that it cannot classify the image—it’s problematic if it confidently classifies objects it shouldn’t be able to.”

Teaching a model to draw this boundary while recognizing known objects in unusual configurations, like a car viewed from different angles, is difficult. Detecting samples that are irrelevant or dissimilar to the training data, also known as out-of-distribution samples, is generally framed as an anomaly detection problem.
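
A common baseline for drawing such a boundary, shown here only as a sketch and not as the unit's method, is to reject any input whose highest predicted class probability falls below a threshold. The class labels, scores, and the 0.8 threshold are assumptions chosen for illustration.

```python
import math

def softmax(logits):
    """Turn raw class scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify_or_reject(logits, labels, threshold=0.8):
    """Return the most likely label, or None if the model is not confident enough."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return None  # treat the input as out-of-distribution
    return labels[best]

labels = ["sedan", "truck", "bus"]                    # hypothetical car classes
print(classify_or_reject([4.0, 0.5, 0.2], labels))    # one class clearly dominates
print(classify_or_reject([1.0, 0.9, 1.1], labels))    # scores are nearly tied
```

The first call returns a label and the second returns `None`. This baseline works only if the model's confidence is low on unfamiliar inputs, which is exactly what fails when a model confidently labels a tiger as a car.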

One approach that the unit is exploring here is contrastive learning, where a model is taught to pull similar inputs together and push dissimilar (i.e., anomalous) ones apart in a feature space.
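
The pull-and-push idea can be written as a loss function. The sketch below uses the classic pairwise contrastive loss with a margin, one of several formulations and not necessarily the one the unit uses. Similar pairs are penalized for being far apart, and dissimilar pairs are penalized only while they are closer than the margin. The embeddings and margin values are made up.

```python
import math

def contrastive_loss(a, b, similar, margin=1.0):
    """Pairwise contrastive loss on two embedding vectors."""
    distance = math.dist(a, b)  # Euclidean distance in feature space
    if similar:
        # Pull: any separation between similar inputs is penalized.
        return distance ** 2
    # Push: dissimilar inputs are penalized only if closer than the margin.
    return max(0.0, margin - distance) ** 2

anchor = [0.0, 0.0]
other = [3.0, 4.0]  # distance 5 from the anchor
print(contrastive_loss(anchor, other, similar=True))               # large: should be closer
print(contrastive_loss(anchor, other, similar=False, margin=6.0))  # small: almost far enough
print(contrastive_loss(anchor, other, similar=False, margin=1.0))  # zero: already far apart
```

Minimizing this loss over many pairs is what moves similar inputs together and anomalous ones away during training.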

“Anomaly detection is closely related to tasks such as novelty detection, irregularity detection, open-set recognition, and out-of-distribution detection. While these tasks are similar in nature, they differ primarily in their testing settings,” says Dr. Sabokrou.

“We are working to unify the metrics across these different task types, which would allow for much greater knowledge sharing.”

The unit also works to make models more trustworthy by subjecting them to various attacks that expose their vulnerabilities. Adversarial attacks use subtle tweaks to the input to provoke errors.
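
As a toy illustration of such a tweak, the sketch below attacks a two-feature linear classifier in the spirit of the fast gradient sign method: each feature is nudged by a small, fixed amount in whichever direction lowers the classifier's score. The weights, input, and step size `epsilon` are hypothetical, and real attacks target far larger models.

```python
def score(weights, bias, x):
    """Linear classifier score; a positive score means the positive class."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def sign(value):
    return (value > 0) - (value < 0)

def perturb(weights, x, epsilon):
    """Shift every feature by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the score with respect to the input
    is the weight vector, so its sign gives the most damaging direction.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]

weights, bias = [2.0, -1.0], 0.0
x = [0.3, 0.2]
x_adv = perturb(weights, x, epsilon=0.2)

print(score(weights, bias, x) > 0)      # original input: positive class
print(score(weights, bias, x_adv) > 0)  # perturbed input: prediction flips
```

No feature changes by more than 0.2, yet the predicted class switches, which is the kind of brittleness these attacks are designed to surface.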

Backdoor attacks exploit hidden triggers in the training data, whether deliberately introduced or unintentionally inherited through spurious correlations or societal bias: a model might wrongly infer family ties in photos based on lighting or pick up bias against underrepresented groups from non-inclusive training sets.

And finally, membership inference attacks test whether a given input was in a model’s training set to detect or exploit data leakage, with major implications for privacy and safety.
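
One simple form of this test, sketched here under the assumption that the attacker can see the probability a model assigns to the correct label, is to compare the model's loss on an input against a threshold. Models tend to fit their training examples more closely, so an unusually low loss hints at membership. The threshold and the probabilities below are made up.

```python
import math

def guess_membership(prob_true_label, threshold=0.1):
    """Guess whether an input was in the training set from the model's loss on it."""
    if not 0.0 < prob_true_label <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    loss = -math.log(prob_true_label)  # cross-entropy loss for this single example
    return loss < threshold

print(guess_membership(0.99))  # near-certain prediction: likely seen in training
print(guess_membership(0.60))  # hesitant prediction: likely unseen
```

In practice the threshold would have to be calibrated, for example on data known to be inside or outside the training set, and the guess is statistical rather than certain.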

These attacks can reveal if a cancer screening model mistakenly learns from artifacts like image scale bars, or if generative models reproduce copyrighted material. Together, these strategies provide a powerful diagnostic to improve the safety and reliability of AI systems.

Promoting growth

Data science is at the foundation of most scientific fields, and as such, improving the methods by which researchers can extract knowledge from data improves the efficiency of the scientific process. And with the explosion in general AI usage, reducing computational cost and maximizing model safety and reliability have become all the more important.

The principle of efficiency permeates the unit, which is characterized by a flat hierarchy and a distributed approach to mentorship. For one, Prof. Yamada encourages unit members to be corresponding authors of their papers: “it’s good for your career and for your learning. That is why it is often our post-docs who take on this role, so that they get the experience.”

In the same vein, mentorship is delegated across the unit, rather than centered on Prof. Yamada, with post-docs and staff scientists usually tasked with directly supervising graduate students and interns—though Prof. Yamada remains within reach of everyone, and he keeps tabs on every project.

“It’s much more efficient to learn by doing. Plus, I like to talk,” he says. Similarly, Dr. Sabokrou is motivated by collaboration, working closely together with interns, companies, former colleagues, and external researchers from around the world. “You naturally build a network through your academic career and helping each other out diffuses knowledge and contributes to progress.”

The flat, high-trust unit culture pays off: four of the five papers accepted for ICLR 2025 were penned by interns. And by taking on a steady stream of university interns and committing resources to various science outreach activities, such as an ongoing math cafe for Okinawan junior high schoolers and the popular Machine Learning Summer School, which last year saw more than 200 participants from across the world, Prof. Yamada and the unit enact their commitment to science through their foundational work.

As he says, “education is the best, long-term investment for both science and society. Our goal is to maximize the efficiency of that investment.”

More information: Siqi Zeng et al, Learning Structured Representations by Embedding Class Hierarchy with Fast Optimal Transport, arXiv (2024). DOI: 10.48550/arxiv.2410.03052

Kira M. Düsterwald et al, Fast unsupervised ground metric learning with tree-Wasserstein distance, arXiv (2024). DOI: 10.48550/arxiv.2411.07432

Journal information: arXiv
