January 12, 2026 by Amanda Norvelle, Texas A&M University

Collected at: https://techxplore.com/news/2026-01-efficient-ai.html

Training artificial intelligence models is costly. Researchers estimate that training costs for the largest frontier models will exceed $1 billion by 2027. Costs are incurred through hardware, including large data centers, energy needs and salaries for research and development staff. The massive training price tag limits the labs and researchers that can afford to work with this technology.

Research from Dr. Tianbao Yang in Texas A&M University’s Department of Computer Science and Engineering may level the playing field.

“Data is the fuel of machine learning. If you want to train a model, we need a lot of computer resources,” Yang said. “If we can reduce the data and computer resources, it could save a lot of money.”

Yang, the Stephen Horn ’79 Engineering Excellence Chair, focuses on efficiently training AI models. His recent research began when he sought a way to capitalize on open-source AI models. Could he use these existing models to train new ones more efficiently on custom datasets?

This question led Yang and his colleagues to use OpenAI’s contrastive language-image pretraining (CLIP) model to train a CLIP model of their own. CLIP models are a family of AI models that bring natural language and images into the same space. They can, for example, help generate an image from a text description.

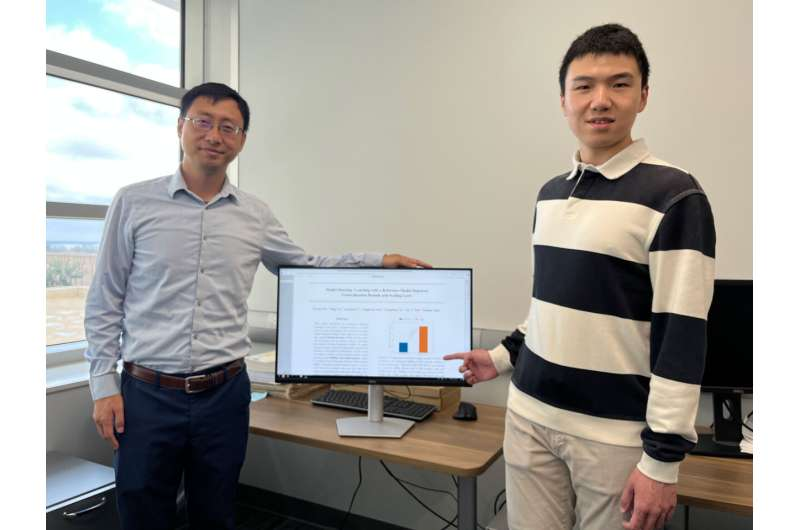

Dr. Tianbao Yang and graduate student Xiyuan Wei developed a framework for model steering called DRRho risk minimization. Credit: Dr. Tianbao Yang / Texas A&M Engineering

“My kids love to do that,” Yang said. “They’ll ask ChatGPT to draw an image of a dinosaur.”

OpenAI was the first to develop a CLIP model, and subsequent models that combine images and text are now considered part of that group. Yang and his team decided to start their research here, but their proposed training framework is general and can be applied to various model types.
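The idea of a shared space for images and text can be illustrated with a toy example. The sketch below is not CLIP itself: the three-dimensional vectors are made up for illustration, and the names `cosine_similarity` and `best_caption` are hypothetical. A real CLIP model learns encoders that produce such vectors, typically with hundreds of dimensions. The sketch only shows how, once both kinds of data live in one space, an image can be matched to the closest caption.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up embeddings; a trained model would compute these from pixels and words.
image_embeddings = {
    "dinosaur_photo": [0.9, 0.1, 0.0],
    "car_photo": [0.0, 0.2, 0.9],
}
text_embeddings = {
    "a drawing of a dinosaur": [0.8, 0.2, 0.1],
    "a red sports car": [0.1, 0.1, 0.95],
}


def best_caption(image_name):
    """Return the caption whose embedding is closest to the image embedding."""
    image_vec = image_embeddings[image_name]
    return max(
        text_embeddings,
        key=lambda caption: cosine_similarity(image_vec, text_embeddings[caption]),
    )


if __name__ == "__main__":
    for name in image_embeddings:
        print(name, "->", best_caption(name))
```

Because matching reduces to comparing vectors, the same space can be searched in either direction, from text to image or from image to text.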

Ultimately, Yang’s target model outperformed OpenAI’s CLIP model while requiring fewer graphics processing units (GPUs), and therefore less processing power, for training. The researchers have publicly released the code for their model, DRRho-CLIP.

“Compared to OpenAI’s solution, we can reduce the data size by half and still get a better performance,” Yang said. “We can reduce the computing budget by more than 15 times, and in our experiments, we used two days of training on eight GPUs compared to their 12 days of training on 256 GPUs.”
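The training figures in the quote can be put on a common scale by multiplying days by GPUs. The sketch below does that with a hypothetical helper, `gpu_days`. This is a crude count that assumes every GPU is equivalent and fully used, which is an assumption made here and not necessarily the measure of computing budget the researchers had in mind.

```python
def gpu_days(days, gpus):
    """Days of training multiplied by the number of GPUs used."""
    return days * gpus


# Figures as quoted in the article.
openai_budget = gpu_days(days=12, gpus=256)
drrho_budget = gpu_days(days=2, gpus=8)
ratio = openai_budget / drrho_budget

if __name__ == "__main__":
    print(f"OpenAI CLIP: {openai_budget} GPU-days")
    print(f"DRRho-CLIP:  {drrho_budget} GPU-days")
    print(f"Ratio:       {ratio:.0f}x")
```

By this rough count the gap is 3,072 GPU-days against 16, which is consistent with the quoted reduction of more than 15 times.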

A method called knowledge distillation, in which a powerful teacher model passes its knowledge to a student model, is often used for training. However, Yang’s work falls under a new training paradigm called model steering, which uses a weaker model to help train a stronger one.

“I don’t need those open-source models to be very strong teachers,” Yang said. “They can be weak and I can still train a stronger one.”

Yang and his graduate student Xiyuan Wei developed a framework for model steering called DRRho risk minimization, which gives a higher weight to higher-quality data. Identifying and focusing on the most important data means that less data is needed overall, making training more efficient. Prior research has explored data weighting, but Yang’s work formalizes the process.
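The article does not spell out how the weights are computed, so the following is only an illustrative sketch of reference-guided data weighting, not the team's released code. It assumes, as a labeled assumption, that a sample is more useful when the model being trained still has a high loss on it while the reference model has a low one, and it turns that gap into weights with a softmax. The function names and the `temperature` parameter are hypothetical.

```python
import math


def steering_weights(model_losses, reference_losses, temperature=1.0):
    """Softmax weights over each sample's excess loss.

    Excess loss = loss of the model being trained minus loss of the
    reference model (assumed scoring rule, for illustration only).
    """
    if not model_losses or len(model_losses) != len(reference_losses):
        raise ValueError("loss lists must be non-empty and of equal length")
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [(m - r) / temperature for m, r in zip(model_losses, reference_losses)]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_loss(model_losses, weights):
    """Training objective in which heavily weighted samples count for more."""
    return sum(w * loss for w, loss in zip(weights, model_losses))


if __name__ == "__main__":
    model_losses = [2.0, 2.0, 2.0]
    reference_losses = [0.5, 2.0, 3.0]  # reference finds sample 0 easy, sample 2 hard
    weights = steering_weights(model_losses, reference_losses)
    print([round(w, 3) for w in weights])
    print(round(weighted_loss(model_losses, weights), 3))
```

In this toy setup, the sample that the reference model handles well but the new model does not receives the largest weight, while a sample that even the reference model struggles with, possibly because it is noisy, is down-weighted. A lower `temperature` concentrates the weight on fewer samples.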

In addition to providing a framework for model steering, Yang’s team supports it with both empirical evidence and theory, a combination that was challenging to achieve.

“It’s easy to conduct some experiments; industry professionals do this all the time, and there are many great researchers working in theory. But the uniqueness of this work is that we bridge the gap between theory and practice,” Yang said. “With our framework, there is theory to guarantee, so everyone can repeat our experiments with guaranteed improvements.”

In the future, Yang hopes to apply this model steering framework to a reasoning model, a type of large language model (LLM) that solves more complex problems, such as math theorems. Collaborators on this project include Oracle, Indiana University, Google and the University of Florida.
