December 23, 2025 by University of Manchester

Collected at: https://techxplore.com/news/2025-12-lowering-barriers-ai-technique-llms.html

Large language models (LLMs) such as GPT and Llama are driving exceptional innovations in AI, but research aimed at improving their explainability and reliability is constrained by massive resource requirements for examining and adjusting their behavior.

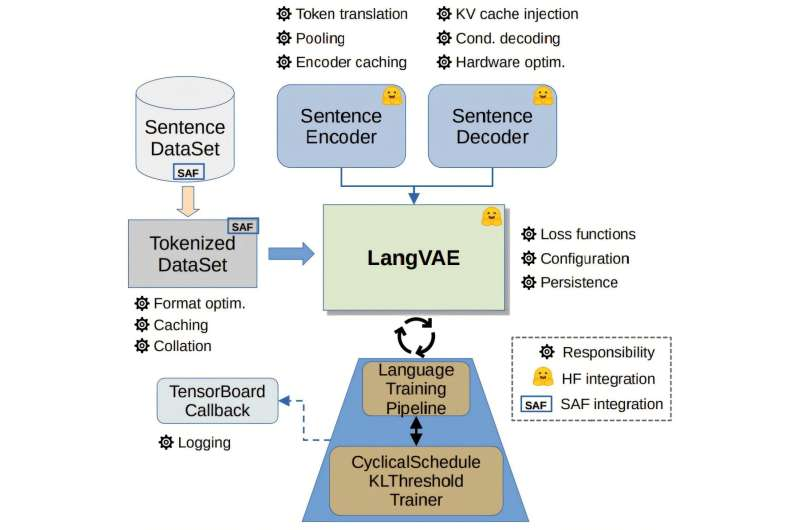

To tackle this challenge, a Manchester research team led by Dr. Danilo S. Carvalho and Dr. André Freitas has developed two new software frameworks, LangVAE and LangSpace, that significantly reduce the hardware and energy resources needed to control and test LLMs when building explainable AI. Their paper is published on the arXiv preprint server.

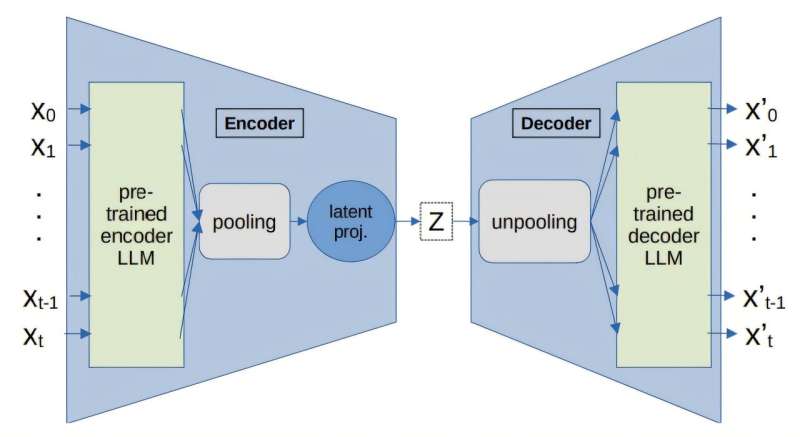

Their technique builds compressed language representations from LLMs, making it possible to interpret and control these models using geometric methods (essentially treating the model’s internal language patterns as points and shapes in space that can be measured, compared and adjusted), without altering the models themselves. Crucially, their approach reduces computer resource usage by more than 90% compared with previous techniques.
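To make the "points and shapes in space" idea more concrete, here is a minimal sketch of the three kinds of geometric operation the description mentions: measuring, comparing and adjusting points in a latent space. It does not use LangVAE or LangSpace, whose APIs are not shown here. The 3-dimensional vectors are hypothetical toy points standing in for compressed representations, and the function names (`cosine_similarity`, `interpolate`, `steer`) are illustrative only.

```python
import math

# A latent point is just a list of coordinates.
Vector = list[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Measure: angle-based similarity between two latent points (-1 to 1)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def interpolate(a: Vector, b: Vector, t: float) -> Vector:
    """Compare: the point a fraction t of the way along the line from a to b."""
    return [(1 - t) * x + t * y for x, y in zip(a, b)]


def steer(z: Vector, direction: Vector, strength: float) -> Vector:
    """Adjust: move a latent point along a chosen direction."""
    return [x + strength * d for x, d in zip(z, direction)]


# Hypothetical toy codes for two sentences that differ in one property.
# In a real system these would come from an encoder, with the LLM left unchanged.
z_positive = [1.0, 0.2, 0.0]
z_negative = [-1.0, 0.2, 0.0]

# The difference between them defines a direction for that property.
direction = [p - n for p, n in zip(z_positive, z_negative)]

similarity = cosine_similarity(z_positive, z_negative)
midpoint = interpolate(z_positive, z_negative, 0.5)
steered = steer(z_negative, direction, 1.0)

print(round(similarity, 3))
print(midpoint)
print(steered)
```

Because every operation here acts only on the vectors, the model that would produce them is never modified, which mirrors the article's point that control happens without altering the LLM itself.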

This leap in efficiency lowers the barriers to entry for developing explainable and controllable AI, opening the door for more researchers, startups and industry teams to explore how these powerful systems work.

Overview of the LangVAE framework. Credit: arXiv (2025). DOI: 10.48550/arxiv.2505.00004

Dr. Carvalho explains, “We have significantly lowered entry barriers for development and experimentation of explainable and controllable AI models and also hope to reduce the environmental impact of these research efforts.

“Our vision is to accelerate the development of trustable and reliable AI for mission-critical applications, such as health care.”

More information: Danilo S. Carvalho et al, LangVAE and LangSpace: Building and Probing for Language Model VAEs, arXiv (2025). DOI: 10.48550/arxiv.2505.00004

Journal information: arXiv
