By Northwestern University December 11, 2025

Collected at: https://scitechdaily.com/scientists-teach-the-brain-to-read-light-as-a-new-sense/

Scientists have created a soft wireless implant that uses tiny flashes of light to send information straight into the brain, allowing animals to learn brand-new artificial signals. By lighting up specific patterns across the cortex, the system teaches the brain to interpret these flashes as meaningful cues that guide decisions and behavior.

Researchers at Northwestern University have introduced a major advance in neurobiology and bioelectronics by creating a wireless device that uses light to transmit information straight into the brain. The approach bypasses the body’s usual sensory pathways and instead interacts with neurons directly.

The system is soft and flexible and fits beneath the scalp while resting on the skull. From this position, it can project carefully programmed light patterns through the bone to stimulate neurons across large areas of the cortex.

Light-Based Signaling Teaches the Brain New Information

During testing, scientists used tiny bursts of patterned light to activate specific groups of neurons in mouse models. (These neurons are genetically modified to respond to light.) The mice quickly learned that certain light patterns represented meaningful cues and used them to guide behavior. Even though no normal senses were involved, the animals used these artificial signals to make decisions and complete behavioral challenges.

Researchers see broad potential for this approach. It could eventually support prosthetic limbs by supplying sensory feedback, deliver new types of artificial input for future hearing or vision devices, help manage pain without drugs, enhance recovery after injury or stroke, and even support brain-controlled robotic limbs.

The study was published on December 8 in Nature Neuroscience.

A New Way to Create and Study Brain Signals

“Our brains are constantly turning electrical activity into experiences, and this technology gives us a way to tap into that process directly,” said Northwestern neurobiologist Yevgenia Kozorovitskiy, who led the experimental work. “This platform lets us create entirely new signals and see how the brain learns to use them. It brings us just a little bit closer to restoring lost senses after injuries or disease while offering a window into the basic principles that allow us to perceive the world.”

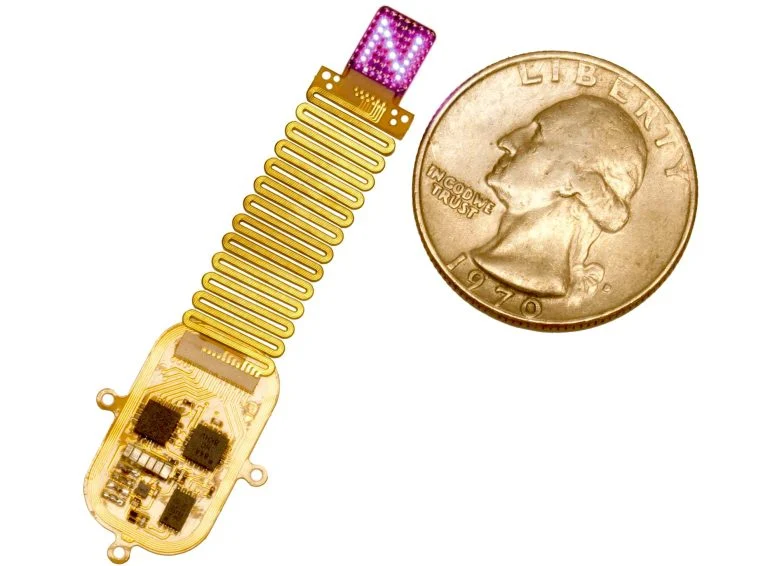

“Developing this device required rethinking how to deliver patterned stimulation to the brain in a format that is both minimally invasive and fully implantable,” said Northwestern bioelectronics pioneer John A. Rogers, who led the technology development. “By integrating a soft, conformable array of micro-LEDs — each as small as a single strand of human hair — with a wirelessly powered control module, we created a system that can be programmed in real time while remaining completely beneath the skin, without any measurable effect on natural behaviors of the animals. It represents a significant step forward in building devices that can interface with the brain without the need for burdensome wires or bulky external hardware. It’s valuable both in the immediate term for basic neuroscience research and in the longer term for addressing health challenges in humans.”

Kozorovitskiy holds the Irving M. Klotz Professorship of Neurobiology at Northwestern’s Weinberg College of Arts and Sciences and is a member of the Chemistry of Life Processes Institute. Rogers serves as the Louis Simpson and Kimberly Querrey Professor of Materials Science and Engineering, Biomedical Engineering and Neurological Surgery in the McCormick School of Engineering and the Feinberg School of Medicine. He also directs the Querrey Simpson Institute for Bioelectronics. The study’s first author is postdoctoral researcher Mingzheng Wu.

Building on Earlier Optogenetics Innovations

The project expands on previous work in which Kozorovitskiy and Rogers created the first fully implantable, programmable, wireless and battery-free device capable of controlling neurons with light. Published in Nature Neuroscience in 2021, that earlier system used a single micro-LED probe to influence social behavior in mice. Traditional optogenetics required fiberoptic cables that limited an animal’s movement, but the wireless design allowed more natural behavior.

The new version deepens this capability by allowing more complex and flexible communication with the brain. Instead of controlling only one small region, the updated device contains a fully programmable array of up to 64 micro-LEDs. Each light can be adjusted in real time, making it possible to send intricate sequences that resemble the distributed patterns seen during real sensory experiences. Because natural perception involves many cortical regions working together, not isolated clusters of neurons, this array produces more realistic activity.

“In the first paper, we used a single micro-LED,” Wu said. “Now we’re using an array of 64 micro-LEDs to control the pattern of cortical activity. The number of patterns we can generate with various combinations of LEDs — frequency, intensity and temporal sequence — is nearly infinite.”
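To give a rough sense of scale for Wu's remark, the short Python sketch below counts patterns under a deliberately simplified model that is not taken from the study: each of the 64 micro-LEDs independently takes one of a fixed number of discrete states at each of a fixed number of time steps. The function name `count_patterns` and the parameters `levels` and `time_steps` are illustrative assumptions, and the values passed in the second call are arbitrary.

```python
# Rough count of stimulation patterns for a micro-LED array.
# Simplifying assumption (not from the study): every LED independently takes
# one of `levels` discrete states at each of `time_steps` time steps.

NUM_LEDS = 64  # array size reported in the article


def count_patterns(num_leds: int, levels: int = 2, time_steps: int = 1) -> int:
    """Return levels ** (num_leds * time_steps), the number of distinct patterns."""
    if num_leds < 0 or levels < 1 or time_steps < 0:
        raise ValueError("num_leds and time_steps must be >= 0, levels >= 1")
    return levels ** (num_leds * time_steps)


if __name__ == "__main__":
    # Simplest case: each LED is either on or off, in a single frame.
    static_on_off = count_patterns(NUM_LEDS)
    print(f"on/off, one frame: {static_on_off:,}")

    # Hypothetical richer case: 4 intensity levels over 10 time steps.
    richer = count_patterns(NUM_LEDS, levels=4, time_steps=10)
    print(f"4 levels, 10 steps: a number with {len(str(richer))} digits")
```

Even the simplest on/off case yields 2^64, or roughly 1.8 × 10^19, distinct patterns. That is finite, but far more than could ever be tested one by one, which is consistent with the "nearly infinite" description.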

Softer Design With Skull-Level Light Delivery

Although more advanced, the device remains small and lightweight. It is about the size of a postage stamp and thinner than a credit card. Unlike earlier probes that extended into the brain, this model rests on the skull surface and sends light through the bone.

“Red light penetrates tissues quite well,” Kozorovitskiy said. “It reaches deep enough to activate neurons through the skull.”

Teaching Animals to Recognize Artificial Brain Codes

For testing, researchers used mice engineered to have light-responsive neurons in the cortex. The animals were trained to connect a specific stimulation pattern with a reward, which was usually found at a particular port in a behavioral chamber.

In repeated trials, the implant delivered a defined pattern across four cortical regions, functioning like a coded signal. The mice learned to distinguish this target pattern from many other possible sequences. When they detected the correct one, they navigated to the appropriate port to claim a reward.

“By consistently selecting the correct port, the animal showed that it received the message,” Wu said. “They can’t use language to tell us what they sense, so they communicate through their behavior.”

Future Work on Artificial Perception Systems

With evidence that the brain can understand patterned light stimulation as meaningful information, the team plans to test more complicated sequences and determine how many different patterns the brain can learn to interpret. Future versions of the device may incorporate more LEDs, smaller spacing between them, larger coverage across the cortex and new wavelengths that reach deeper brain regions.

Reference: “Patterned wireless transcranial optogenetics generates artificial perception” by Mingzheng Wu, Yiyuan Yang, Jinglan Zhang, Andrew I. Efimov, Xiuyuan Li, Kaiqing Zhang, Yue Wang, Kevin L. Bodkin, Mohammad Riahi, Jianyu Gu, Glingna Wang, Minsung Kim, Liangsong Zeng, Jiaqi Liu, Lauren H. Yoon, Haohui Zhang, Sara N. Freda, Minkyu Lee, Jiheon Kang, Joanna L. Ciatti, Kaila Ting, Stephen Cheng, Xincheng Zhang, He Sun, Wenming Zhang, Yi Zhang, Anthony Banks, Cameron H. Good, Julia M. Cox, Lucas Pinto, Abraham Vázquez-Guardado, Yonggang Huang, Yevgenia Kozorovitskiy and John A. Rogers, 8 December 2025, Nature Neuroscience.

DOI: 10.1038/s41593-025-02127-6

The study, “Patterned wireless transcranial optogenetics generates artificial perception,” was supported by the Querrey Simpson Institute for Bioelectronics, NINDS/BRAIN Initiative, National Institute of Mental Health, One Mind Nick LeDeit Rising Star Research Award, Kavli Exploration Award, Shaw Family Pioneer Award, Simons Foundation, Alfred P. Sloan Foundation and the Christina Enroth-Cugell and David Cugell Fellowship.
