November 29, 2025 by Ingrid Fadelli, Phys.org

Collected at: https://phys.org/news/2025-11-humans-artificial-neural-networks-similar.html

Past psychology and behavioral science studies have identified various ways in which people’s acquisition of new knowledge can be disrupted. One of these, known as interference, occurs when learning new information makes it harder for people to correctly recall knowledge that they acquired earlier.

Interestingly, a similar tendency was also observed in artificial neural networks (ANNs), computational models inspired by biological neurons and the connections between them. In ANNs, interference can manifest as so-called catastrophic forgetting, a process via which models “unlearn” specific skills or information after they are trained on a new task.

In other instances, knowledge acquired in the past can instead help humans or ANNs learn how to complete a new task. This phenomenon, known as “transfer,” entails the application of existing knowledge or skills to a novel task or problem.

Researchers at the University of Oxford recently carried out a study comparing how people and ANNs learn to complete tasks that require them to follow specific logical rules. Their findings, published in Nature Human Behaviour, uncovered similar interference and transfer patterns in the computational models and in humans, while also identifying two broad categories of human learners that exhibit distinct learning patterns.

“In ANNs, acquiring new knowledge often interferes with existing knowledge,” wrote Eleanor Holton, Lukas Braun and their colleagues in their paper. “Although it is commonly claimed that humans overcome this challenge, we find surprisingly similar patterns of interference across both types of learner.”

Comparing how people and ANNs learn rule-based tasks

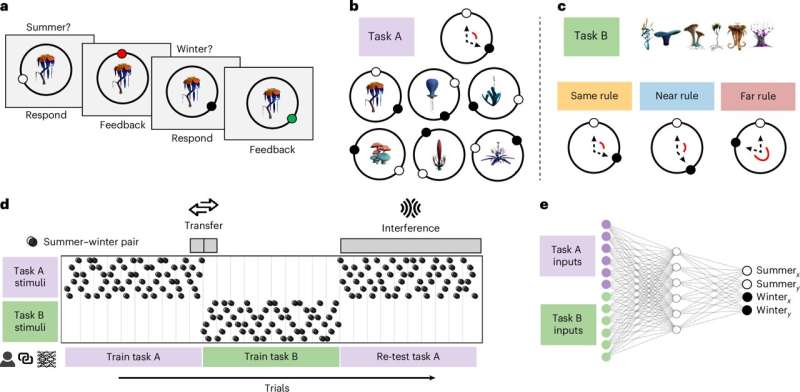

As part of their study, Holton, Braun and their colleagues tested humans and neural networks on tasks that required them to learn and follow specific logical rules. These are clear, explicit rules, such as pressing one key if a shape is blue, and another key if it’s red, or sorting objects into groups based on their shape.

The tasks used by the researchers were organized in an A-B-A sequence. This means that the first task required participants to follow one rule (A), the second required a different rule (B), and the third returned to the rule from the first task (A).

“When learning sequential rule-based tasks (A–B–A), both learners benefit more from prior knowledge when the tasks are similar—but as a result, they also exhibit greater interference when retested on task A,” wrote the authors. “In networks, this arises from reusing previously learned representations, which accelerates new learning at the cost of overwriting prior knowledge.”

Essentially, Holton and her colleagues found that when the rules of the tasks they used were similar, both humans and ANNs tended to transfer the knowledge learned in the first task to the second. However, many then exhibited interference effects when asked to switch back to the first rules that they had learned, during the third sub-task.

‘Lumpers’ and ‘splitters’

Interestingly, the researchers also found that humans tended to exhibit one of two distinct patterns during the experiment. Some people exhibited high transfer and high interference, while others exhibited low transfer and low interference. The team dubbed these two categories of learners ‘lumpers’ and ‘splitters.’

“In humans, we observe individual differences: one group (‘lumpers’) shows more interference alongside better transfer, while another (‘splitters’) avoids interference at the cost of worse transfer,” wrote Holton, Braun and their colleagues. “These behavioral profiles are mirrored in neural networks trained in the rich (lumper) or lazy (splitter) regimes, encouraging overlapping or distinct representations respectively.”
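The trade-off between the two profiles can be illustrated with a minimal Python sketch. This is not the authors' model: the rich and lazy training regimes described in the paper are replaced here by a much simpler stand-in, in which a "lumper" keeps one shared weight vector for both tasks and a "splitter" keeps a separate one per task. The function names (`run_aba`, `train`, `squared_error`), the two-dimensional "rules" and the way transfer and interference are scored are all assumptions made for illustration.

```python
def squared_error(weights, rule):
    """Squared distance between the learned weights and a task's target rule."""
    return sum((w - r) ** 2 for w, r in zip(weights, rule))


def train(weights, rule, steps=200, lr=0.1):
    """Plain gradient descent on the squared error; returns new weights."""
    for _ in range(steps):
        weights = [w - lr * 2 * (w - r) for w, r in zip(weights, rule)]
    return weights


def run_aba(shared, rule_a, rule_b):
    """Run a toy A-B-A schedule and return (transfer, interference).

    shared=True  -> hypothetical "lumper": task B reuses and overwrites A's weights.
    shared=False -> hypothetical "splitter": task B gets its own fresh weights.
    """
    naive = [0.0] * len(rule_a)

    # Phase 1: learn task A from scratch.
    w_a = train(naive, rule_a)
    error_a_learned = squared_error(w_a, rule_a)

    # Phase 2: learn task B, starting from A's weights or from scratch.
    start_b = w_a if shared else naive
    error_b_start = squared_error(start_b, rule_b)
    w_b = train(start_b, rule_b)

    # Phase 3: retest task A with whatever weights now serve task A.
    w_retest = w_b if shared else w_a
    error_a_retest = squared_error(w_retest, rule_a)

    # Transfer: head start on B compared with a learner that never saw A.
    transfer = squared_error(naive, rule_b) - error_b_start
    # Interference: how much worse A is at retest than right after learning it.
    interference = error_a_retest - error_a_learned
    return transfer, interference


# Two similar, made-up rules (unit vectors a small angle apart).
rule_a = [1.0, 0.0]
rule_b = [0.8, 0.6]

lumper = run_aba(True, rule_a, rule_b)
splitter = run_aba(False, rule_a, rule_b)

print(f"lumper:   transfer={lumper[0]:.2f}, interference={lumper[1]:.2f}")
print(f"splitter: transfer={splitter[0]:.2f}, interference={splitter[1]:.2f}")
# lumper:   transfer=0.60, interference=0.40
# splitter: transfer=0.00, interference=0.00
```

In this toy setup the shared-weight learner starts task B with a head start but returns to task A with degraded performance, while the separate-weight learner gains nothing from task A and loses nothing on it. The sketch only captures the qualitative pattern of overlapping versus distinct representations. It does not reproduce the networks or the measures used in the study.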

Overall, the team’s findings suggest that when they are acquiring different knowledge in a sequence, humans and AI algorithms can fall into similar patterns that either disrupt or support their learning. In the future, the insight they gathered could inform the advancement of ANNs, while also potentially improving the present understanding of how humans acquire and retain knowledge.

More information: Eleanor Holton et al, Humans and neural networks show similar patterns of transfer and interference during continual learning, Nature Human Behaviour (2025). DOI: 10.1038/s41562-025-02318-y

Journal information: Nature Human Behaviour
