November 26, 2025 by The Korea Advanced Institute of Science and Technology (KAIST)

Collected at: https://techxplore.com/news/2025-11-visualizing-internal-ai-decision.html

Although deep learning–based image recognition technology is advancing rapidly, it remains difficult to explain clearly the criteria an AI model uses internally to observe and judge images. In particular, analyzing how large-scale models combine various concepts (e.g., cat ears, car wheels) to reach a conclusion has long been recognized as a major unsolved challenge.

Professor Jaesik Choi’s research team at the Kim Jaechul Graduate School of AI has developed a new explainable AI (XAI) technique that visualizes, at the level of circuits, how concepts form inside a model, enabling humans to understand the basis on which AI makes decisions.

The study is regarded as a significant step toward letting researchers structurally examine “how AI thinks.” The paper, with Ph.D. candidates Dahee Kwon and Sehyun Lee of the KAIST Kim Jaechul Graduate School of AI as co-first authors, was presented on October 21 at the International Conference on Computer Vision (ICCV 2025).

How neurons and circuits work in AI

Inside deep learning models are basic computational units called neurons, loosely analogous to those in the human brain. Each neuron detects a small feature within an image, such as the shape of an ear, a specific color, or an outline, and computes a value (signal) that is passed to the next layer.
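As a rough illustration of the "compute a value and pass it on" idea, a single artificial neuron can be sketched as a weighted sum of its inputs plus a bias, followed by a nonlinearity (ReLU here). This is a generic textbook-style sketch assuming made-up input features and weights; it is not taken from the KAIST work.

```python
# Generic toy example: the input features and weights are invented for
# illustration and do not come from any real model.


def relu(x: float) -> float:
    """Rectified linear unit: pass positive signals, block negative ones."""
    return max(0.0, x)


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: weighted sum of its inputs plus a bias, then ReLU."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return relu(total)


def layer(inputs: list[float], weight_rows: list[list[float]], biases: list[float]) -> list[float]:
    """A layer is several neurons reading the same inputs; its outputs feed the next layer."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Three made-up input features: outline strength, redness, fur-texture score.
features = [0.9, 0.1, 0.7]

hidden = layer(features, weight_rows=[[1.0, 0.0, 0.5], [0.0, 2.0, -1.0]], biases=[0.0, 0.1])
output = layer(hidden, weight_rows=[[1.0, 1.0]], biases=[-0.5])
print(hidden, output)
```

With these invented numbers, the first hidden neuron outputs about 1.25, the second is silenced by ReLU (its weighted sum is negative), and the single output neuron receives about 0.75.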

In contrast, a circuit refers to a structure in which multiple neurons are connected to jointly recognize a single meaning (concept). For example, to recognize the concept of a cat ear, neurons detecting outline shapes, neurons detecting triangular forms, and neurons detecting fur-color patterns must activate in sequence, forming a functional unit (circuit).

Moving from neuron-centric to circuit-centric explanation

Until now, most explanation techniques have taken a neuron-centric approach, based on the idea that “a specific neuron detects a specific concept.” In reality, however, deep learning models form concepts through cooperative circuits involving many neurons. Building on this observation, the KAIST team proposed a technique that expands the unit of concept representation from “neuron” to “circuit.”

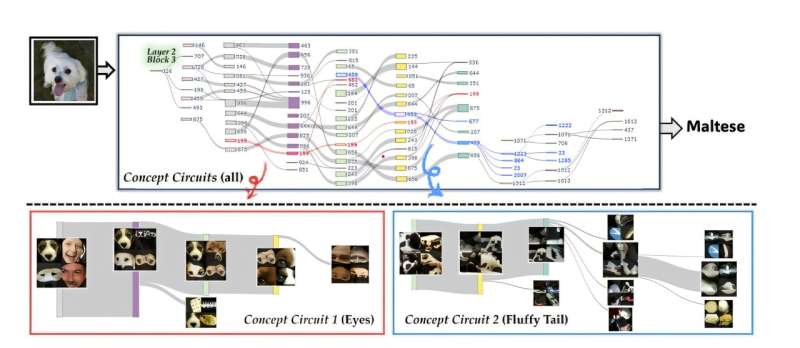

The team’s new method, Granular Concept Circuits (GCC), analyzes and visualizes how an image-classification model forms concepts internally at the circuit level.

How GCC technology works and its impact

GCC automatically traces circuits by computing Neuron Sensitivity and Semantic Flow. Neuron Sensitivity indicates how strongly a neuron responds to a particular feature, while Semantic Flow measures how strongly that feature is passed on to the next concept. Using these metrics, the system can visualize, step-by-step, how basic features such as color and texture are assembled into higher-level concepts.
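The article describes Neuron Sensitivity and Semantic Flow only at a high level, so the following sketch uses simple stand-ins to show the general shape of metric-guided circuit tracing. The hypothetical `sensitivity` is a neuron's mean activation on images showing a feature minus its mean activation on ordinary images; the hypothetical `flow` is a source neuron's activation times its connection weight. These are assumptions for illustration, not the formulas from the GCC paper. The trace starts at a high-level neuron and walks backward, keeping only lower-level neurons that pass both thresholds; every neuron name, number, and threshold is invented.

```python
# Hypothetical sketch: `sensitivity` and `flow` are simple stand-ins chosen
# for illustration, NOT the Neuron Sensitivity / Semantic Flow formulas of GCC.
# Every neuron name, activation, weight, and threshold here is invented.


def sensitivity(on_feature: list[float], baseline: list[float]) -> float:
    """Toy sensitivity: mean activation on images showing a feature minus
    mean activation on ordinary images."""
    return sum(on_feature) / len(on_feature) - sum(baseline) / len(baseline)


def flow(src_activation: float, weight: float) -> float:
    """Toy flow: the signal a lower neuron actually sends along one connection."""
    return src_activation * weight


def trace_circuit(root, incoming, activation, sens, flow_min=0.5, sens_min=0.1):
    """Walk backward from `root`, keeping each (source, target, flow) edge whose
    source both responds to its feature and passes enough signal upward."""
    edges = []
    seen = {root}
    stack = [root]
    while stack:
        target = stack.pop()
        for source, weight in incoming.get(target, []):
            f = flow(activation[source], weight)
            if f >= flow_min and sens[source] >= sens_min:
                edges.append((source, target, f))
                if source not in seen:
                    seen.add(source)
                    stack.append(source)
    return edges


# Invented activation samples: (images with the feature, ordinary images).
samples = {
    "outline": ([0.9, 0.7], [0.3, 0.5]),
    "triangle": ([1.0, 0.8], [0.4, 0.4]),
    "fur_color": ([0.6, 0.8], [0.4, 0.4]),
    "stripe": ([0.5, 0.5], [0.45, 0.45]),
    "ear_shape": ([1.2, 1.2], [0.6, 0.6]),
}
sens = {name: sensitivity(*acts) for name, acts in samples.items()}

# Activations on one cat image, and each neuron's incoming (source, weight) links.
activation = {"outline": 0.8, "triangle": 0.9, "fur_color": 0.7,
              "stripe": 0.6, "ear_shape": 1.2}
incoming = {
    "cat_ear": [("ear_shape", 1.0), ("fur_color", 0.9), ("stripe", 1.0)],
    "ear_shape": [("outline", 0.8), ("triangle", 0.7)],
}

circuit = trace_circuit("cat_ear", incoming, activation, sens)
for source, target, f in circuit:
    print(f"{source} -> {target} (flow {f:.2f})")
```

With these made-up values, the trace keeps `ear_shape` and `fur_color` feeding `cat_ear`, plus `outline` and `triangle` feeding `ear_shape`. The `stripe` neuron passes plenty of signal but is dropped because its toy sensitivity (about 0.05) falls below the threshold, which is the point of combining the two measures: a neuron must both care about a feature and actually pass it on.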

The team also ran experiments in which specific circuits were temporarily disabled (ablation). When the circuit responsible for a concept was deactivated, the model’s predictions changed, directly demonstrating that the circuit does perform the function of recognizing that concept.
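To make the logic of an ablation test concrete, here is a toy sketch with an invented three-neuron model; it is not the team's model or code. A neuron is "disabled" by forcing its output to zero, and the prediction is compared with and without that intervention. A control ablation of a neuron outside the hypothetical circuit is included for comparison.

```python
# Hypothetical toy model: class scores are weighted sums of a few named hidden
# neurons. All weights and activations are invented for illustration.

CLASS_WEIGHTS = {
    "cat": {"ear_shape": 1.0, "fur_color": 0.8, "wheel": -0.5},
    "car": {"ear_shape": -0.2, "fur_color": 0.0, "wheel": 1.0},
}


def predict(activation: dict[str, float], ablated: frozenset = frozenset()) -> str:
    """Return the highest-scoring class, treating ablated neurons as silent (zero)."""
    scores = {
        label: sum(w * (0.0 if name in ablated else activation[name])
                   for name, w in weights.items())
        for label, weights in CLASS_WEIGHTS.items()
    }
    return max(scores, key=scores.get)


image = {"ear_shape": 1.2, "fur_color": 0.7, "wheel": 0.9}

baseline = predict(image)                                            # intact model
circuit_off = predict(image, frozenset({"ear_shape", "fur_color"}))  # ablate the toy "cat ear" circuit
control_off = predict(image, frozenset({"wheel"}))                   # ablate an unrelated neuron
print(baseline, circuit_off, control_off)
```

In this toy setup, zeroing the two circuit neurons flips the prediction from `cat` to `car`, while zeroing the unrelated `wheel` neuron leaves it at `cat`. Comparing against a control like this helps show that the change comes from removing that particular circuit rather than from removing any neuron at all.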

Significance and future applications of the research

This study is regarded as the first to reveal, at a fine-grained circuit level, the actual structural process by which concepts form inside complex deep learning models. The researchers suggest it has practical applications across explainable AI (XAI), including making AI decision-making more transparent, analyzing the causes of misclassification, detecting bias, improving model debugging and architecture design, and enhancing safety and accountability.

The research team stated, “This technology shows the concept structures that AI forms internally in a way that humans can understand. This study provides a scientific starting point for researching how AI thinks.”

Professor Choi emphasized, “Unlike previous approaches that simplified complex models for explanation, this is the first approach to precisely interpret the model’s interior at the level of fine-grained circuits,” and added, “We demonstrated that the concepts learned by AI can be automatically traced and visualized.”

More information: Paper, “Granular Concept Circuits: Toward a Fine-Grained Circuit Discovery for Concept Representations”
