April 16, 2025 by Jeff Shepard

Collected at: https://www.eeworldonline.com/what-determines-the-size-of-the-dataset-needed-to-train-an-ai/

Large datasets are needed to train artificial intelligence (AI) algorithms, and they can be expensive to obtain. So, how much data is enough? The answer depends primarily on the complexity of the problem, the complexity of the model, the quality of the data, and the required level of accuracy.

Data augmentation techniques can increase the size of a dataset, and learning curve analysis can determine when training results have been optimized.

Problem complexity is a major factor in the size of the required dataset. Image recognition is complex and requires a larger training dataset than simple image classification. In addition, problems with more features need more training examples to learn all the possible relationships.

Model complexity is also important, and a deep learning model with more parameters can require a very large dataset for effective learning. A common rule of thumb is the “rule of 10,” which states that effective training requires about 10 times as many data points as there are parameters in the model.
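As a rough illustration of the rule of 10, the sketch below multiplies a parameter count by 10. The function name `rule_of_10_samples` and the small network used as an example are hypothetical and chosen only for illustration; the result is a starting estimate, not a guarantee.

```python
def rule_of_10_samples(num_parameters: int, factor: int = 10) -> int:
    """Return a rough starting estimate of the training examples needed."""
    if num_parameters < 0:
        raise ValueError("num_parameters must be non-negative")
    return factor * num_parameters


# Hypothetical example: a small dense network with 20 inputs, one hidden
# layer of 16 units, and 1 output (weights plus biases in each layer).
params = (20 * 16 + 16) + (16 * 1 + 1)
estimate = rule_of_10_samples(params)
print(f"{params} parameters -> about {estimate} training examples")
```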

Data quality and augmentation

Data with minimal noise or inconsistencies is “high quality” training data. It can be difficult to obtain large quantities of high-quality data, but smaller datasets can be augmented to artificially increase the dataset’s size.

Augmentation can be used with all types of data. Even seemingly small changes are sufficient. For example, effective forms of augmentation for a dataset of images can include cropping, reflection, rotation, scaling, translation, or adding Gaussian noise, as shown in Figure 1.
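Several of these image transformations can be sketched in a few lines of NumPy. This is a minimal sketch, assuming images are arrays scaled to the range [0, 1]; the `augment` function, the crop fraction, the shift distance, and the noise level are all illustrative choices rather than recommended settings.

```python
import numpy as np


def augment(image: np.ndarray, rng: np.random.Generator) -> dict[str, np.ndarray]:
    """Return simple augmented variants of an (H, W) or (H, W, C) image."""
    h, w = image.shape[:2]
    # Crop the central region (removes one eighth from each edge).
    top, left = h // 8, w // 8
    cropped = image[top:h - top, left:w - left]
    # Translate by shifting pixels right; np.roll wraps around at the border.
    translated = np.roll(image, shift=w // 10, axis=1)
    # Add Gaussian noise, then clip back into the valid [0, 1] range.
    noisy = np.clip(image + rng.normal(0.0, 0.05, size=image.shape), 0.0, 1.0)
    return {
        "reflected": np.fliplr(image),   # mirror left-to-right
        "rotated_90": np.rot90(image),   # rotate 90 degrees counterclockwise
        "cropped": cropped,
        "translated": translated,
        "noisy": noisy,
    }


rng = np.random.default_rng(seed=0)
image = rng.random((32, 48))  # stand-in for a real grayscale image in [0, 1]
variants = augment(image, rng)
for name, arr in variants.items():
    print(name, arr.shape)
```

Each variant can be added to the training set with the same label as the original image, which is how one source image becomes several training examples.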

Underfitting and overfitting

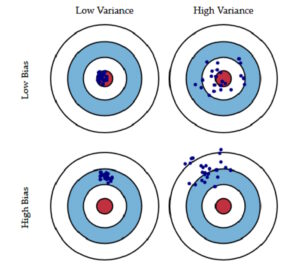

Bias and variance metrics can be used to determine the quality of an AI/ML model. High bias is the prediction error that results from a model that’s too simple (also called underfitting), while high variance indicates that the model is too complex (overfitting) and fits the “noise” in the dataset in addition to the underlying data.

The ideal model has low bias and low variance. The two metrics can be considered independent, as shown in Figure 2. However, in the case of AI/ML models, they tend to be inversely related, and decreasing one usually leads to an increase in the other. That’s called the “bias-variance tradeoff” and is an important consideration in learning curve analysis when determining the success of model training.
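For squared-error loss, the tradeoff is often written as the standard bias-variance decomposition. The notation here is an assumption, not taken from the article: the data is modeled as $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, and $\hat{f}$ is the trained model, with expectations taken over possible training sets.

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
+ \sigma^2
$$

The $\sigma^2$ term is irreducible noise, so model selection can only trade the first two terms against each other.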

Epochs and learning curve analysis

An epoch represents a complete cycle of training an AI/ML model using a given dataset. Epochs are also used in learning curve analysis to determine the optimal number of training cycles.

Learning curve analysis is important because the required number of epochs can reach the thousands. However, using more epochs to “refine” the results is not always better, since training for too many epochs results in overfitting.

The learning curve plots the amount of training (usually measured in epochs) on the x-axis and the model’s accuracy (or other performance metric) on the y-axis. Learning curve analysis compares results on the training data with results on a set of validation data. The validation data can be an independent dataset or a held-out subset of the original dataset that is not used for training.
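One simple way to read a learning curve is to find the epoch where the validation metric stops improving. The sketch below uses validation loss (lower is better) as the performance metric and a hypothetical `patience` parameter; the loss values are made up for illustration and are not measured results.

```python
def best_epoch(val_losses: list[float], patience: int = 3) -> int:
    """Return the 1-based epoch with the lowest validation loss seen before
    `patience` consecutive epochs pass without improvement (0 if empty)."""
    best_loss = float("inf")
    best_idx = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_loss, best_idx = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation loss has stopped improving
    return best_idx


# Made-up loss values for illustration only.
train_losses = [0.90, 0.62, 0.45, 0.34, 0.26, 0.20, 0.15, 0.11, 0.08]
val_losses = [0.95, 0.70, 0.56, 0.48, 0.45, 0.46, 0.49, 0.53, 0.58]

stop_at = best_epoch(val_losses)
gap = val_losses[-1] - train_losses[-1]
print(f"lowest validation loss at epoch {stop_at}")
print(f"train/validation gap after the final epoch: {gap:.2f}")
```

In this example the training loss keeps falling while the validation loss turns upward, which is the widening gap that signals overfitting.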

Analysis limitations

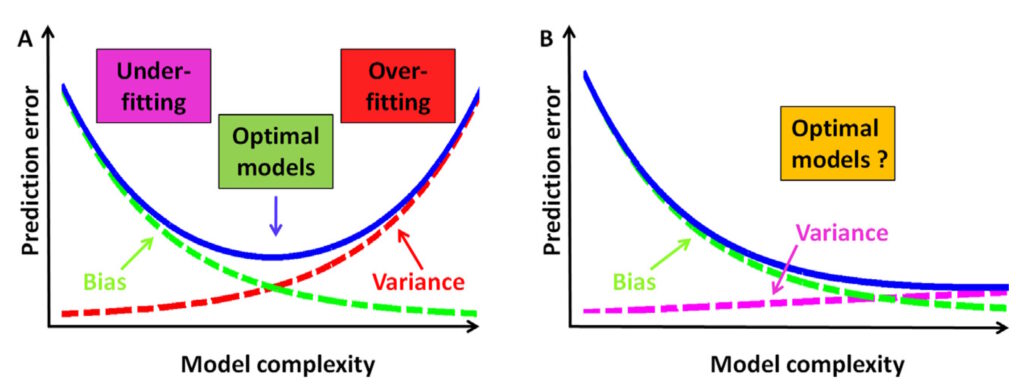

Not all models have the same relationship between bias and variance. That can make it challenging to identify an optimal model.

Generally, an optimal model can be identified when the combined bias and variance reach a global minimum, as in Figure 3a. For some models, variance may increase more slowly than bias decreases (Figure 3b), and identifying the optimal model may not be as simple. In those cases, a new or refined model may provide improved results.
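The idea of a combined minimum can be explored with a small simulation. This is a sketch under stated assumptions, not the method behind Figure 3: it fits polynomials of increasing degree to synthetic noisy samples of sin(πx), estimates squared bias and variance over many resampled training sets, and reports the degree with the lowest sum. The function name, noise level, and sample sizes are illustrative.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def bias_variance_by_degree(degrees, n_trials=200, n_points=30, noise=0.3, seed=0):
    """Estimate bias^2 and variance of polynomial fits to noisy sin(pi*x)."""
    rng = np.random.default_rng(seed)
    x_train = np.linspace(-1.0, 1.0, n_points)
    x_test = np.linspace(-0.9, 0.9, 50)
    true_test = np.sin(np.pi * x_test)
    results = {}
    for degree in degrees:
        preds = np.empty((n_trials, x_test.size))
        for t in range(n_trials):
            # Draw a fresh noisy training set and fit a polynomial to it.
            y_train = np.sin(np.pi * x_train) + rng.normal(0.0, noise, size=n_points)
            coeffs = P.polyfit(x_train, y_train, degree)
            preds[t] = P.polyval(x_test, coeffs)
        # Bias^2: squared gap between the average prediction and the truth.
        bias_sq = float(np.mean((preds.mean(axis=0) - true_test) ** 2))
        # Variance: spread of predictions across training sets.
        variance = float(np.mean(preds.var(axis=0)))
        results[degree] = (bias_sq, variance)
    return results


results = bias_variance_by_degree(degrees=range(1, 10))
best_degree = min(results, key=lambda d: sum(results[d]))
for degree, (bias_sq, variance) in results.items():
    print(f"degree {degree}: bias^2={bias_sq:.4f} variance={variance:.4f}")
print("lowest bias^2 + variance at degree", best_degree)
```

When neighboring degrees give nearly equal sums, the minimum is shallow, which is the situation where picking a single optimal model becomes difficult.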

Summary

The “rule of 10” can provide a starting point for determining the amount of data needed for AI/ML training. Data availability can be expanded at a low cost using augmentation techniques. Training results can be analyzed using learning curves, but finding the optimal model is not always simple and can require adjustment or replacement.
