December 22, 2025 by University of Edinburgh

Collected at: https://techxplore.com/news/2025-12-ai-memory-boosts-accuracy.html

Researchers have developed a new way to compress the memory used by AI models to increase their accuracy in complex tasks or help save significant amounts of energy.

Experts from University of Edinburgh and NVIDIA found that large language models (LLMs) using memory eight times smaller than an uncompressed LLM scored better on math, science and coding tests while spending the same amount of time reasoning.

The method can be used in an alternative way to help LLMs respond to more user queries simultaneously, reducing the amount of power needed per task.

As well as energy savings, experts say the improvements could benefit AI systems that are used to solve complicated tasks or in devices that have slow or limited memory, such as smart home devices and wearable technology.

How memory compression improves AI

By “thinking” about more complex hypotheses or exploring more hypotheses concurrently, AI models improve their problem-solving abilities. In practice, this is achieved by generating more reasoning threads—a step-by-step logical process used to solve problems—in text form.

The model’s memory—called the KV cache—which stores the portions of the threads generated, can act as a bottleneck, as its size slows down the generation of reasoning thread outputs during inference—the process by which AI models respond to an input prompt, such as answering a user query.

The more threads there are, and the longer they are, the more memory is required. The larger the memory size used, the longer the LLM takes to retrieve the KV cache from the part of the AI device where it is stored.

Introducing dynamic memory sparsification

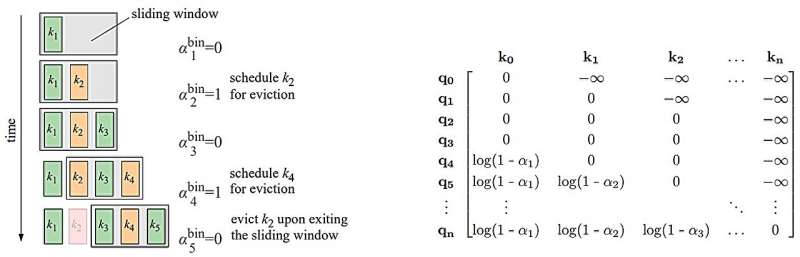

To overcome this, the team developed a method to compress the models’ memory—called Dynamic Memory Sparsification (DMS). Instead of keeping every token—the units of data that an AI model processes—DMS decides which ones are important enough to keep and which ones can be deleted.

There is a slight delay between the time when the decisions to delete tokens using sparsification are made and when they are removed. This gives the model a chance to pass on any valuable information from the evicted tokens to preserved ones.

In managing which tokens to keep and which to discard, DMS lets the AI model “think” in more depth or explore more possible solutions without needing extra computer power.

Testing and results of the new method

The researchers tested DMS on different versions of the AI models Llama and Qwen and compared their performance to models without compression.

The models’ performance was assessed using standardized tests. It was found even with memories compressed to one eighth their original size, LLMs fully retain their original accuracy in difficult tasks while accelerating reasoning compared with non-compressed models.

In the standardized math test AIME 24, which served as the qualifier for the United States Mathematical Olympiad, the compressed models performed twelve points better on average using the same number of KV cache reads to produce an answer.

For GPQA Diamond—a series of complex questions in biology, chemistry and physics authored by Ph.D.-level experts—the models performed over eight points better.

The models were also tested with LiveCode Bench, which measures how well AI models can write code. The compressed models scored on average ten points better than non-compressed models.

Future directions

The findings from this work were presented at the conference NeurIPS.

Dr. Edoardo Ponti, GAIL Fellow and Lecturer in Natural Language Processing at the University’s School of Informatics, said, “In a nutshell, our models can reason faster but with the same quality. Hence, for an equivalent time budget for reasoning, they can explore more and longer reasoning threads. This improves their ability to solve complex problems in math, science, and coding.”

Dr. Ponti and his team will continue to investigate ways large AI systems represent and remember information, making them far more efficient and sustainable.

More information: Paper: Inference-Time Hyper-Scaling with KV Cache Compression

Leave a Reply