October 27, 2025 by Ingrid Fadelli, Phys.org

Collected at: https://techxplore.com/news/2025-10-memristor-ferroelectric-memory-energy-efficient.html

Over the past decades, electronics engineers have developed a wide range of memory devices that can safely and efficiently store increasing amounts of data. However, each type of device developed to date comes with its own trade-offs, which limit its overall performance and restrict its possible applications.

Researchers at Université Grenoble Alpes (CEA-Leti, CEA List), Université de Bordeaux (CNRS) and Université Paris-Saclay (CNRS) recently developed a new memory device that combines two complementary components that are typically used separately: memristors and ferroelectric capacitors (FeCAPs). This unified memristor-ferroelectric memory, presented in a paper published in Nature Electronics, could be particularly promising for running artificial intelligence (AI) systems that autonomously learn to make increasingly accurate predictions.

“The ‘ideal’ memory would be high-density, non-volatile, capable of non-destructive readout, and offer virtually infinite endurance,” Elisa Vianello, senior author of the paper, told Tech Xplore.

“Unfortunately, such a memory does not yet exist—and it may never be fully achievable. To address this limitation, we realized a new approach was needed—one that could combine the complementary strengths of different memory devices.”

When they examined the components of existing memory solutions, Vianello and her colleagues realized that FeCAPs and memristors share a remarkably similar stack structure, even if their operation relies on very different physical mechanisms. This ultimately inspired them to develop a new memory that integrates the functionalities of both these components within a single stack, which could be advantageous for the energy-efficient training and implementation of AI algorithms.

“A memristor stores information by changing its electrical resistance through the creation and dissolution of a conductive filament that bridges the two electrodes,” explained Vianello.

“Programming these resistance states requires precise current control, which influences both the programming power and the device’s write endurance. In contrast, reading operations involve only a low-voltage, short pulse to determine the stored resistance values.”
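The read operation Vianello describes can be illustrated with Ohm's law. The short Python sketch below is only an illustration: the read voltage and the two resistance values are assumed round numbers, not figures from the paper, and the function names are hypothetical.

```python
# Illustrative sketch only. All numeric values are assumptions,
# not measurements from the Nature Electronics paper.
READ_VOLTAGE = 0.1  # volts; assumed low read voltage


def read_current(resistance_ohm, voltage=READ_VOLTAGE):
    """Ohm's law: current drawn by one cell during a read pulse."""
    return voltage / resistance_ohm


def column_current(resistances_ohm, input_voltages):
    """Currents of cells sharing a line add up (Kirchhoff's current law),
    which yields a dot product of input voltages and conductances."""
    return sum(v / r for v, r in zip(input_voltages, resistances_ohm))


low_resistance = 10e3    # assumed 10 kOhm: conductive filament formed
high_resistance = 100e3  # assumed 100 kOhm: conductive filament dissolved

i_low = read_current(low_resistance)    # larger current
i_high = read_current(high_resistance)  # smaller current
i_sum = column_current([low_resistance, high_resistance],
                       [READ_VOLTAGE, READ_VOLTAGE])
```

Under these assumed values, the two states are told apart by the current they draw at the same read voltage, and summing currents along a shared line is the basic idea behind computing with stored resistances.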

A FeCAP, the second component of the hybrid system developed by Vianello and her colleagues, is a memory device based on a ferroelectric material. This device stores information via the reversible polarization of the ferroelectric material, which is switched by an applied electric field.

“Because polarization reversal requires an ultralow displacement current, FeCAPs offer exceptional endurance and extremely low energy consumption during programming,” said Vianello.

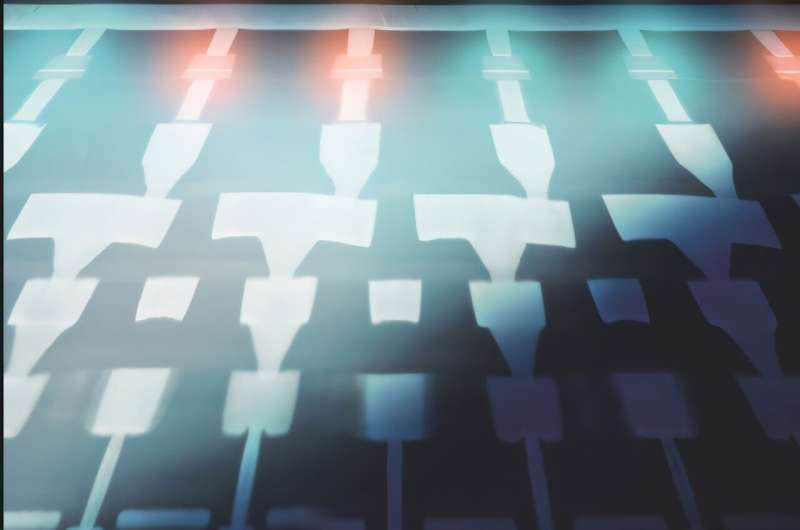

“Our ferroelectric-memristor memory integrates silicon-doped HfO₂ (commonly used in FeCAPs) with a titanium scavenging layer (typically used in memristors). In our design, all devices initially behave as FeCAPs but, thanks to the Ti layer, can be transformed into memristors through an electrical forming operation.”

Essentially, the hybrid memory created by this team of researchers combines the best features of memristors and FeCAP technology. Like memristors, it excels at inference, as it can store analog weights, is energy-efficient during read operations and supports in-memory computing. By integrating FeCAPs, however, it also supports rapid and low-energy updates, which is ideal when training machine learning algorithms.
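The article does not describe how the two roles are coordinated during training. The toy Python sketch below shows one plausible pattern under stated assumptions: many small, cheap updates accumulate in a store playing the FeCAP-like role, and the value is only occasionally copied, quantized to a few analog levels, into a store playing the memristor-like role that is read during inference. The class name, the number of levels, the learning rate and the transfer interval are all hypothetical and not taken from the paper.

```python
class HybridSynapse:
    """Toy model of one weight kept in two places (illustrative only)."""

    def __init__(self, levels=9, w_max=1.0):
        self.hidden = 0.0      # frequently updated value (FeCAP-like role)
        self.analog = 0.0      # value read at inference (memristor-like role)
        self.levels = levels   # assumed number of analog levels
        self.w_max = w_max     # assumed weight range [-w_max, +w_max]
        self.cheap_writes = 0
        self.costly_writes = 0

    def update(self, delta):
        """Apply a small training update to the frequently written store."""
        self.hidden = max(-self.w_max, min(self.w_max, self.hidden + delta))
        self.cheap_writes += 1

    def transfer(self):
        """Copy the hidden value to the analog store, quantized to a level."""
        step = 2 * self.w_max / (self.levels - 1)
        self.analog = round(self.hidden / step) * step
        self.costly_writes += 1

    def infer(self, x):
        """Inference only reads the analog store."""
        return self.analog * x


# Toy training loop: learn y = 0.5 * x with plain gradient descent.
syn = HybridSynapse()
target = 0.5
for step in range(1, 101):
    x = 1.0
    error = syn.hidden * x - target * x
    syn.update(-0.1 * error * x)   # assumed learning rate of 0.1
    if step % 10 == 0:             # assumed transfer every 10 updates
        syn.transfer()
```

In this sketch, 100 updates hit the frequently written store while the analog store is programmed only 10 times, which mirrors the division of labor described above: low-energy writes for training, analog reads for inference.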

“We demonstrated a memory technology that combines the functions of memristors and FeCAPs within a single stack,” said Vianello. “This hybrid approach leverages the strengths of both device types, enabling efficient and reliable on-chip training and inference of artificial neural networks.”

This recent work by Vianello and her colleagues could soon inspire other research groups to develop further hybrid data storage solutions that combine seemingly very different memory components. In the future, the memory they created could be improved further and used to support the training and implementation of edge AI, an approach in which AI algorithms run directly on local hardware instead of relying on remote cloud servers and data centers.

“Many real-world applications require AI systems to continually learn new tasks or adapt to changing inputs without catastrophically forgetting previously acquired knowledge,” added Vianello.

“However, classical deep learning methods tend to overwrite existing parameters with new information. Recently, several new algorithms have been proposed to tackle these challenges. Our next goal is to integrate our memory technology with these emerging approaches, paving the way for systems that can learn continuously and adapt dynamically—much like the human brain.”

More information: Michele Martemucci et al, A ferroelectric–memristor memory for both training and inference, Nature Electronics (2025). DOI: 10.1038/s41928-025-01454-7.

Journal information: Nature Electronics
