April 29, 2025 by Patricia DeLacey, University of Michigan College of Engineering

Collected at: https://techxplore.com/news/2025-04-enemy-good-deep-cloud.html

A new communication-collective system, OptiReduce, speeds up AI and machine learning training across multiple cloud servers by setting time boundaries rather than waiting for every server to catch up, according to a study led by a University of Michigan researcher.

While some data is lost to timeouts, OptiReduce approximates lost data and reaches target accuracy faster than competitors. The results were presented today at the USENIX Symposium on Networked Systems Design and Implementation in Philadelphia, Pennsylvania.

As the size of AI and machine learning models continues to increase, training requires several servers or nodes to work together in a process called distributed deep learning. When training runs within cloud computing centers, congestion and delays arise because multiple workloads are processed at once in the shared environment.

To overcome this barrier, the research team suggests an approach that is analogous to the switch from general-purpose CPUs, which were not able to handle AI and machine learning training, to domain-specific GPUs with higher efficiency and performance in training.

“We have been making the same mistake with communication by using the most general purpose data transportation. What NVIDIA has done for computing, we are trying to do for communication—moving from general purpose to domain-specific to prevent bottlenecks,” said Muhammad Shahbaz, an assistant professor of computer science and engineering at U-M and corresponding author of the study.

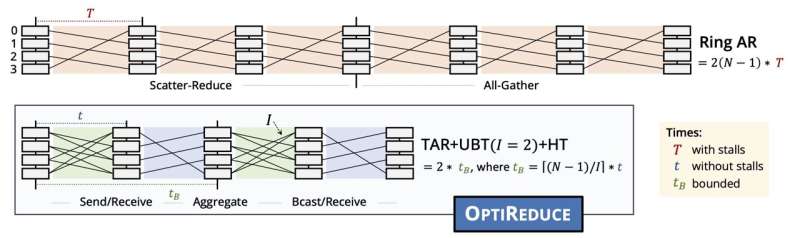

Up to this point, distributed deep learning systems have required perfect, reliable communication between individual servers. This leads to slowdowns at the tail end because training waits for all servers to catch up before moving on.

Instead of waiting for stragglers, OptiReduce introduces time limits for server communication and moves on without waiting for every server to complete its task. To respect time boundaries while maximizing useful communication, the limits adaptively shorten during quiet network periods and lengthen during busy periods.
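The adaptive time limit can be illustrated with a short sketch. This is not OptiReduce's implementation: the article does not give the adaptation rule, so the moving-average update and every name and parameter here (`AdaptiveDeadline`, `alpha`, `headroom`, `collect_before_deadline`) are assumptions made for illustration, and arrival times are simulated rather than measured on a network.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class AdaptiveDeadline:
    """Hypothetical time limit that follows recent round times (assumed rule)."""

    deadline_ms: float = 10.0  # current time limit for one communication round
    alpha: float = 0.5         # weight given to the newest observation
    headroom: float = 1.5      # slack multiplier on top of the typical round time
    min_ms: float = 1.0        # never wait less than this
    max_ms: float = 100.0      # never wait longer than this

    def update(self, typical_round_ms: float) -> float:
        """Blend the old limit with a new target, then clamp to [min_ms, max_ms]."""
        target = self.headroom * typical_round_ms
        blended = (1 - self.alpha) * self.deadline_ms + self.alpha * target
        self.deadline_ms = min(self.max_ms, max(self.min_ms, blended))
        return self.deadline_ms


def collect_before_deadline(arrivals_ms: dict[str, float], deadline_ms: float) -> list[str]:
    """Return the workers whose data arrived in time; stragglers are skipped."""
    return sorted(w for w, t in arrivals_ms.items() if t <= deadline_ms)
```

With these assumed defaults, two quiet rounds of about 2 ms shrink the limit from 10 ms to 6.5 ms and then 4.75 ms, while a busy round of about 20 ms raises it again to 17.375 ms. A call such as `collect_before_deadline({"a": 1.0, "b": 3.0, "c": 50.0}, 5.0)` keeps workers `a` and `b` and moves on without `c`.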

While some information is lost in the process, OptiReduce leverages the resiliency of deep learning systems by using mathematical techniques to approximate the lost data and minimize the impact.
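One simple way to see why missing entries need not derail training is to average over only the contributions that arrived. The article does not specify which mathematical techniques OptiReduce uses, so the sketch below is a stand-in under that assumption, not the authors' method; the function name `lossy_mean` and the use of `None` to mark entries lost to a timeout are hypothetical.

```python
from __future__ import annotations

from typing import Optional


def lossy_mean(chunks: list[list[Optional[float]]]) -> list[float]:
    """Element-wise mean across workers, skipping entries lost to a timeout.

    Each inner list is one worker's gradient chunk; None marks a lost entry.
    If every worker lost a given entry, that position falls back to 0.0.
    """
    length = len(chunks[0])
    result = []
    for i in range(length):
        received = [c[i] for c in chunks if c[i] is not None]
        result.append(sum(received) / len(received) if received else 0.0)
    return result


# Worker 2 lost its second entry; the mean for that position uses the other two.
averaged = lossy_mean([[1.0, 2.0], [3.0, None], [5.0, 4.0]])  # [3.0, 3.0]
```

Dividing by the number of received values, rather than by the total number of workers, keeps the average on the same scale as a lossless round.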

“We’re redefining the computing stack for AI and machine learning by challenging the need for 100% reliability required in traditional workloads. By embracing bounded reliability, machine learning workloads run significantly faster without compromising accuracy,” said Ertza Warraich, a doctoral student of computer science at Purdue University and first author of the study.

The research team tested OptiReduce against existing systems in two settings: a local virtualized cluster, a set of networked servers that share resources, and CloudLab, a public testbed for shared cloud applications. After training multiple neural network models, they measured how quickly models reached target accuracy, known as time-to-accuracy, and how much data was lost.
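Time-to-accuracy can be read off a training log as the first moment the model meets the target. The sketch below assumes a hypothetical log format, a list of `(elapsed_seconds, accuracy)` pairs in chronological order; it is not the evaluation code used in the study.

```python
from __future__ import annotations

from typing import Optional


def time_to_accuracy(history: list[tuple[float, float]], target: float) -> Optional[float]:
    """Return the elapsed time at which accuracy first reaches the target.

    history holds (elapsed_seconds, accuracy) pairs in chronological order.
    Returns None if the target is never reached.
    """
    for elapsed, accuracy in history:
        if accuracy >= target:
            return elapsed
    return None


# Made-up example log: the 0.7 target is first met at the 20-second mark.
log = [(10.0, 0.5), (20.0, 0.7), (30.0, 0.9)]
tta = time_to_accuracy(log, 0.7)  # 20.0
```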

OptiReduce outperformed existing systems, achieving a 70% faster time-to-accuracy than Gloo and running 30% faster than NCCL when working in a shared cloud environment.

When testing the limits of how much data could be lost in timeouts, they found models could lose about 5% of the data without sacrificing performance. Larger models—including Llama 4, Mistral 7B, Falcon, Qwen and Gemini—were more resilient to loss while smaller models were more susceptible.

“OptiReduce was a first step toward improving performance and alleviating communication bottlenecks by leveraging the domain-specific properties of machine learning. As a next step, we’re now exploring how to shift from software-based transport to hardware-level transport at the NIC to push toward hundreds of Gigabits per second,” said Shahbaz.

NVIDIA, VMware Research and Feldera also contributed to this research.

More information: “OptiReduce: Resilient and tail-optimal AllReduce for distributed deep learning in the cloud,” Ertza Warraich, Omer Shabtai, Khalid Manaa, Shay Vargaftik, Yonatan Piasetzky, Matty Kadosh, Lalith Suresh, and Muhammad Shahbaz, USENIX Symposium on Networked Systems Design and Implementation (2025). www.usenix.org/conference/nsdi … resentation/warraich
