By Evan Gough, Universe Today January 16, 2025

Collected at: https://scitechdaily.com/the-biggest-simulation-ever-frontier-supercomputer-models-the-universe/

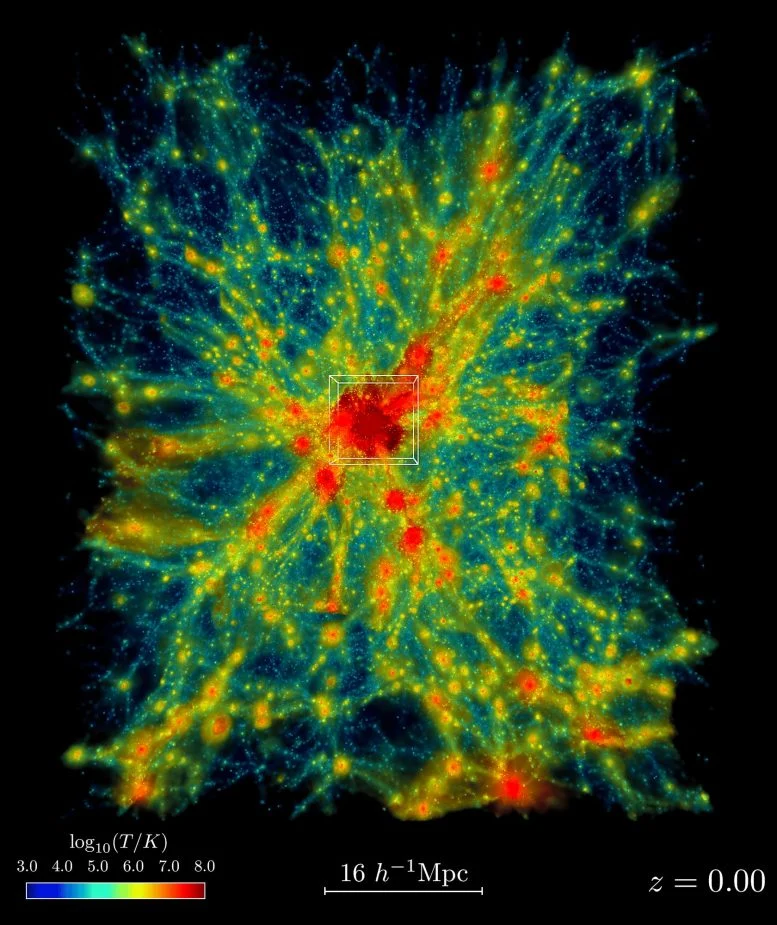

At Argonne National Laboratory, scientists have used the Frontier supercomputer to create an unprecedented simulation of the universe, covering a volume 10 billion light-years across and incorporating complex physics models.

This monumental achievement allows for new insights into galaxy formation and cosmic evolution, showcasing the profound capabilities of exascale computing.

Breakthrough in Universe Simulation

Scientists at the Department of Energy’s Argonne National Laboratory have achieved a groundbreaking milestone by creating the largest astrophysical simulation of the Universe to date. The simulation was made possible by the Frontier supercomputer, which until recently was the most powerful in the world. Its scale matches the vast surveys conducted by advanced telescopes and observatories, offering new insights into the cosmos.

Frontier, located at Oak Ridge National Laboratory in Tennessee, is currently the second-fastest supercomputer in the world, surpassed only by El Capitan, which pulled ahead in November 2024. Frontier was the world’s first exascale supercomputer, and it now shares that performance class with El Capitan; both machines represent the cutting edge of computational power.

A New Era in Astrophysics

This record-breaking simulation showcases the immense capabilities of exascale computing. Frontier’s power enables simulations with a level of precision previously unattainable, allowing scientists to model the Universe in extraordinary detail. However, harnessing exascale technology to its full potential requires innovative programming approaches, reflecting the complexity of this next-generation computing frontier.

The simulation is a significant leap in astrophysical modeling. It covers a volume of the Universe that is 10 billion light-years across and incorporates detailed physics models for dark matter, dark energy, gas dynamics, star formation, and black hole growth. It should provide new insights into some of the fundamental processes in the Universe, such as how galaxies form and how the large-scale structure of the Universe evolves.

Understanding the Components of the Universe

“There are two components in the universe: dark matter, which as far as we know only interacts gravitationally, and conventional matter, or atomic matter,” said project lead Salman Habib, division director for Computational Sciences at Argonne.

“So, if we want to know what the universe is up to, we need to simulate both of these things: gravity as well as all the other physics including hot gas, and the formation of stars, black holes, and galaxies,” he said. “The astrophysical ‘kitchen sink,’ so to speak. These simulations are what we call cosmological hydrodynamics simulations.”

The Mechanics of Cosmological Simulations

Cosmological hydrodynamics simulations combine cosmology with hydrodynamics, allowing astronomers to examine the complex interplay between gravity and processes such as gas dynamics and stellar evolution that have shaped, and continue to shape, our Universe. Only supercomputers can run them, because of their complexity and the vast number of equations and calculations involved.

Energy Demands and Scientific Payoff

Frontier’s power demands are staggering. The machine draws about 21 MW of electricity, enough to power about 15,000 single-family homes in the US. But the payoff is equally impressive.
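
The homes comparison can be sanity-checked with simple arithmetic. The sketch below uses only the two figures quoted above and assumes, as a simplification, that Frontier draws a constant 21 MW around the clock; the function names are illustrative, not taken from any official source.

```python
# Back-of-envelope check of the comparison above: ~21 MW for Frontier
# versus ~15,000 US single-family homes.
# Assumption: the machine draws a constant 21 MW all year (a simplification).

FRONTIER_POWER_MW = 21.0   # figure quoted in the article
HOMES = 15_000             # figure quoted in the article
HOURS_PER_YEAR = 24 * 365  # ignores leap years


def per_home_power_kw(total_mw: float, homes: int) -> float:
    """Average continuous power available per home, in kilowatts."""
    return total_mw * 1_000 / homes


def annual_energy_mwh(power_mw: float) -> float:
    """Energy used over one year at a constant power level, in megawatt-hours."""
    return power_mw * HOURS_PER_YEAR


kw_per_home = per_home_power_kw(FRONTIER_POWER_MW, HOMES)
print(f"Average draw per home: {kw_per_home:.2f} kW")
print(f"Implied yearly use per home: {kw_per_home * HOURS_PER_YEAR:,.0f} kWh")
print(f"Frontier yearly use at full power: {annual_energy_mwh(FRONTIER_POWER_MW):,.0f} MWh")
```

Under these assumptions the comparison works out to about 1.4 kW of continuous draw per home, or roughly 12,264 kWh per home per year; that per-home figure is the one to hold up against household consumption data when judging the comparison.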

“For example, if we were to simulate a large chunk of the universe surveyed by one of the big telescopes such as the Rubin Observatory in Chile, you’re talking about looking at huge chunks of time — billions of years of expansion,” Habib said. “Until recently, we couldn’t even imagine doing such a large simulation like that except in the gravity-only approximation.”

The Power of Exascale Computing

“It’s not only the sheer size of the physical domain, which is necessary to make direct comparison to modern survey observations enabled by exascale computing,” said Bronson Messer, Oak Ridge Leadership Computing Facility director of science. “It’s also the added physical realism of including the baryons and all the other dynamic physics that makes this simulation a true tour de force for Frontier.”

Frontier’s uses extend well beyond cosmology. In June, researchers working with it achieved another milestone: they simulated water in a system of 466 billion atoms. That was the largest system ever modeled and more than 400 times larger than its closest competitor. Because water is a primary component of cells, the work paves the way for an eventual simulation of a living cell.

Frontier promises advances in many other areas as well, including nuclear fission and fusion and large-scale energy transmission systems. It has also been used to run a quantum molecular dynamics simulation that is 1,000 times greater in size and speed than any of its predecessors. Its other applications include modeling diseases, developing new drugs, designing better batteries and materials (including concrete), and predicting and mitigating climate change.

Combining Simulations with Observations

Astrophysical and cosmological simulations like Frontier’s are most powerful when they are combined with observations. Scientists can use simulations to test theoretical models against observational data. Changing initial conditions and parameters in the simulations lets researchers see how different factors shape outcomes. It is an iterative process: by identifying discrepancies between observations and simulations, scientists can update their models.

The Frontier simulation is just one example of how supercomputers and AI are taking on a larger role in astronomy and astrophysics. Modern astronomy generates massive amounts of data and requires powerful tools to manage it. Our theories of cosmology now rest on ever-larger datasets, and simulating them requires massive computing power.

Frontier has already been superseded by El Capitan, another exascale supercomputer, at Lawrence Livermore National Laboratory (LLNL). According to LLNL, however, El Capitan is focused on managing the nation’s nuclear stockpile.

Adapted from an article originally published on Universe Today.
